YouTube Wants to Pay Record Labels to Create Soulless Versions of Your Favorite Artists

The largest AI chatbot companies are racing to strike multimillion-dollar deals with news networks and Reddit so they can legally train their AI models on human-made content. Now, Google-owned YouTube wants to ink similar deals for its existing AI music replication software, and a new report details how the video platform is trying to court major labels.

The record labels in question include Sony, Warner, and Universal, according to a report from The Financial Times based on anonymous sources. The latter two already have deals with YouTube that could be expanded, but Sony would be a newcomer to Google’s music-cloning ambitions.

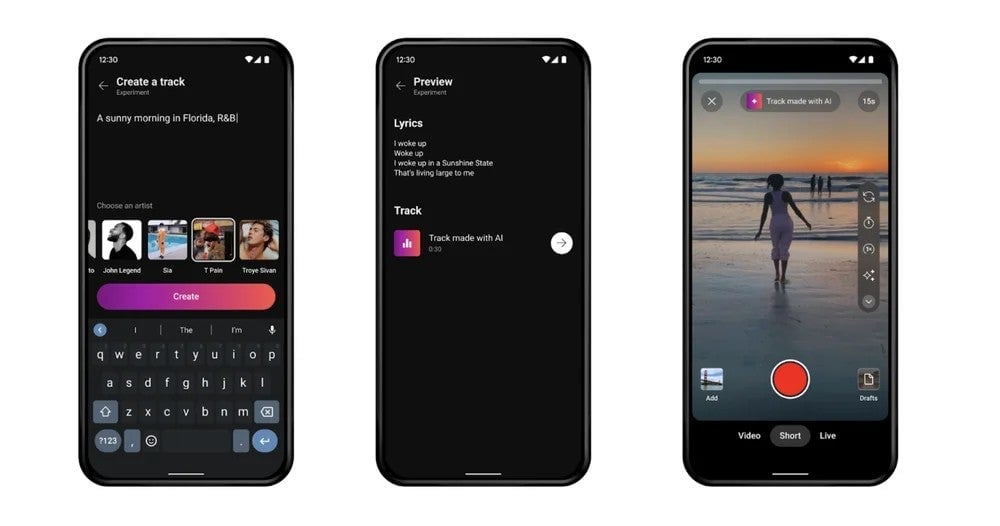

This would give the streamer access to more artists it could use to train the company’s existing and future AI music generators. Late last year, the tech giant rolled out Dream Track, an “experimental” program in YouTube Shorts that lets users generate short tracks with the voices of artists like T-Pain or John Legend.

The program and its underlying AI model, dubbed Lyria, were developed by Google DeepMind. Since then, the AI-centric subsidiary has built more tools that generate soundtracks from text or video prompts.

YouTube told FT it “wasn’t looking to expand Dream Track” but confirmed it was “in conversations with labels about other experiments.”

We only know of nine artists who have signed up and allowed their voices to be cloned on Dream Track, including stars like Sia, Louis Bell, Demi Lovato, and Charlie Puth. That was back in November, and today, it remains unclear if any more artists have signed over their voices or are planning to. According to the FT report, YouTube’s deals wouldn’t cover all musicians under each label but would be licensing deals with one-off payments for specific artists.

Few have had early access to Dream Track, but those who have used it, like YouTuber Cleo Abram, noted that it limits requests to just 50 characters and removes any uncouth words from the prompt.

It remains unclear which major labels have taken the bait, but any hesitancy is in no small part due to artists’ own opposition to having their voices cloned. YouTube itself updated its policies late last year to crack down on user-generated AI music deepfakes. The video service also noted it would allow labels to request takedowns of music like the ultra-popular fake Drake song “Heart on My Sleeve.”

Multiple major artists, including Billie Eilish and Nicki Minaj, signed an open letter against using their voices to train AI. Several of the world’s biggest labels, including Sony Music and Warner Music, sued two AI startups on Monday, alleging the companies took copyrighted music to train AI models without asking first.

These tracks are, ultimately, soulless. YouTube has advertised Dream Track as a way to generate some quick, easy background music for YouTube Shorts. That platform and other short-form video apps like TikTok are already filled to the brim with often-stolen video content dubbed over with AI commentary, and it seems that, in its current iteration, Dream Track is just another means of pumping out more lifeless, low-effort content.

Dream Track might not remain in its current state, or YouTube could craft some new AI music-generating tool, per FT’s sources. Either way, the generated songs must be as cut down and inoffensive as possible to avoid any trouble with the artists themselves. Not only will the music be derivative, but it will inevitably be without any real sense of artistry or intent, AKA more AI slop.