Google’s vision for Pixel 9 is great, but doesn’t escape my biggest problem with AI

I’ve still not found myself using generative AI regularly, even as Gemini takes over seemingly every Google product. With the Pixel 9 series, AI is more important than ever, but I’m still frustrated by the same fundamental flaw in generative AI, one that even the Pixel 9 can’t escape.

This issue of 9to5Google Weekender is part of 9to5Google’s rebooted newsletter that highlights the biggest Google stories with added commentary and other tidbits.

From the beginning, and especially now that generative AI is being used to “enhance” search, my problem with the technology has been that it is often confidently incorrect. You might get a correct response 10 times, but in my eyes that’s not worth it if the next response presents incorrect information as absolute fact.

That’s a problem generative AI, at least Google’s, still hasn’t really been able to fix.

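Confident misinformation is the stumbling block that seems to hurt Google’s AI ambitions more than anyone else’s. One can’t think about this topic without “glue pizza” coming to mind, after Google’s AI Overviews suggested adding glue to pizza sauce to keep the cheese from sliding off.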

On the Pixel 9 series, Google is presenting AI front and center, and after a mere week of use, I’ve already run into multiple instances of this confident misinformation, each one reminding me why I barely ever use AI tools.

One example from this week involved an afternoon meeting. The restaurant where we planned to meet was closed at the time we’d picked (around 3pm), so on the way, I asked Gemini for other nearby options that were actually open. The exact prompt was “can you show me other restaurants that are open near [insert restaurant name]?”

Gemini instead spat out the location I’d literally just mentioned.

When I corrected the AI, asking for options that were open, it just repeated the same spot. I then reiterated that the location listed was closed, to which it, guess what, said the same thing again.

Meanwhile, 20 seconds of opening Google Maps and searching gave me the answer I needed with a whole lot less frustration.

The whole point of generative AI in an assistant is supposed to be getting better results that are reasoned out, not just looked up. But Gemini still fails at this far too often. In this example, it’s not that Gemini lacked the information. In fact, it even stated the location’s hours directly. It just failed to use that information.

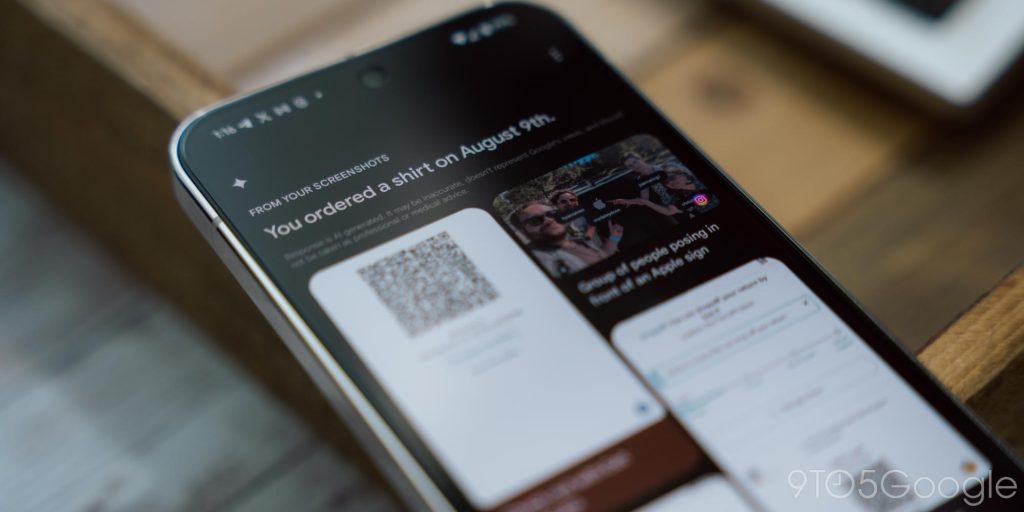

I found the same to be true within Pixel Screenshots.

One of the things I was excited about with this app was the ability to take screenshots of online orders and ask the AI to find estimated arrival dates or the date I’d placed an order. But it’s rather bad at that. For instance, I asked it to find the date I’d purchased a shirt (an order I placed on August 15, with a screenshot of the checkout page), but it instead pulled up a completely unrelated Amazon return and claimed that I ordered a shirt on September 9, weeks in the future.

Further attempts after I’d added more screenshots to the app weren’t any better. At one point it said I had ordered the shirt on August 19, the day I asked the question, while the screenshot below shows the app claiming I’d placed the order on August 9, days before I started using the phone and a date not referenced in any of my screenshots.

This one is a little easier to forgive, though. The Pixel Screenshots app is solely using the information provided within the screenshots. It can’t leverage tracking numbers, it can’t read my email, so the information available to it is limited. Ultimately that’s fine by me. If it can’t do it, I get it. This is not particularly easy stuff!

The problem is that it confidently gives an answer that may be correct, or may be wildly misinformed.

This is why I simply do not see the appeal of things like Gemini Live. Google’s conversational AI assistant is undoubtedly impressive in how it speaks and how it reacts to what you’re saying. But that won’t stop it from spitting out wildly incorrect information that it presents as absolute fact. Heck, while using the Pixel 9 this week, our Andrew Romero ran into an issue where Gemini Live insisted he was planning a trip to Florida, even though he repeatedly said he wasn’t. That’s clearly an AI hallucination, and it only reinforces my lack of trust in these products.

Until these issues are fixed, I think there’s a gaping hole in Google’s vision for AI on the Pixel 9 series that tiny disclaimers absolutely cannot fill. We can argue about practicality and whether anyone even wants these features, but the fact is they’re happening. I just want to be able to have some level of trust in them.

This Week’s Top Stories

Pixel 9 reviews

Following its announcement earlier this month, our first reviews of the Google Pixel 9 series are here. Stay tuned for a camera-focused look at the Pixel 9 Pro XL, a review of Pixel 9 Pro Fold, and reviews of Pixel Watch 3 and Buds Pro 2 coming soon.

From the rest of 9to5

9to5Mac: AirPods Max 2 coming soon: Here’s what to expect

9to5Toys: Amazon officially announces its massive upcoming Prime Big Deal Days event for October

Electrek: Tesla Semi is spotted in Europe, but why?

Follow Ben: Twitter/X, Threads, and Instagram

FTC: We use income-earning auto affiliate links.

Source: 9to5google.com